Two key digital technologies that I use in all of my classrooms, regardless of topic, are Google Docs and Google Forms. I use each of these technologies in many ways. In particular, I use Google Docs every semester to organize my office hour meetings, and I use Google Forms every semester to solicit feedback from my students so that I can gauge gaps in knowledge, class preferences, and how I am doing as an instructor before end-of-course institutional evaluation surveys. Further, I use these two tools in conjunction with each other when creating a participation self-evaluation that enables students to identify how they are participating in the course. I find both of these tools incredibly productive for managing my pedagogy, and in this section, I hope to demonstrate how useful they can be for other instructors.

Further, I find the modules function in the Canvas LMS extremely helpful for students’ (and my own) management of course materials and deadlines. The final section of my innovations and reflections explains how and why I set up my course site the way I do.

Office hours

In my pedagogy, I emphasize the necessity of individual instruction in order to identify and meet specific student needs. This individual instruction is delivered through office hour meetings. So that students can sign up for these meetings, I set up a Google Doc, shown below, in which the sharing settings are set so that anyone with the link can edit the document. The Google Doc enables students to identify the available times for them to meet and to sign up for or cancel meetings at will according to their needs. This practice helps prevent students from coming to “walk-in,” first-come-first-served hours at the same time as one another while still giving them the freedom to sign up or cancel at the last minute. Additionally, the Google Doc allows me to forecast which students will attend, usually to discuss recent projects, so that I can re-familiarize myself with their work ahead of time.

Allowing students to choose when they need individual instruction and how often they need it helps instill a sense of agency in their education beyond what is required of them, especially in frequently required courses like the composition courses I teach. I do require a first meeting early in the semester of my first-year students, and I have found that this initial meeting makes students feel as if they can come to office hours to get help, with most students coming back several times throughout the semester (and sometimes after their semester as my student is over).

Broadly, office hours are a sort of unspoken requirement of higher education that many students may not feel comfortable with, especially for classes much bigger than any I teach. However, once students see how useful these meetings are and that these interactions are often less scary than anticipated, their willingness to attend office hours has the potential to transfer into how they approach their education in other classes as well.

Feedback Surveys

Classes can vary widely in their preferences for the delivery of class materials. In order to solicit feedback on student preferences, I have created informal and anonymous feedback surveys to collect information on what is engaging and what is not, along with suggestions on how to improve the course and my own pedagogy. If the surveys are delivered early in the semester, lesson planning for future units can be informed by that particular class’s preferences. If they are delivered later in the semester, they can be used to assess how students are feeling about their progress and ability to succeed in the class.

For this discussion of the possible usefulness of implementing feedback surveys, I will be featuring anonymous student responses from two semesters, a fall semester and a spring semester, of ENGL 15, an introductory writing course. I did adjust the questions in the survey between the semesters, partly by accident, as I did not make a direct copy of the survey from one semester to the next. Both surveys were given to students at the beginning of the third unit out of four across the semester, and this effectively made the submissions come in a little after the middle of the semester.

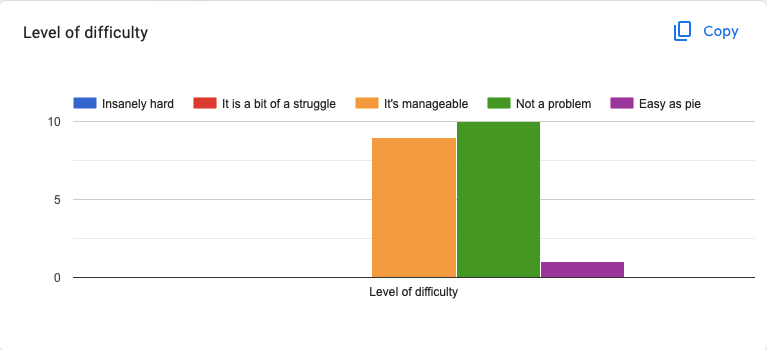

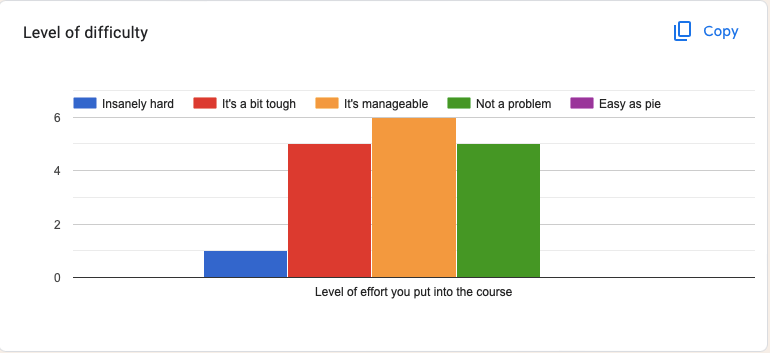

First, I begin these feedback surveys with a question to generally assess how difficult the students are finding the class. The available survey options include “insanely hard,” “it’s a bit tough,” “it’s manageable,” “not a problem,” and “easy as pie.” I have included images of how the question would appear to student responders, the chart of scores for a fall semester of students, and a chart of scores for a spring semester of students. These charts’ main purpose is to show me how students’ attitudes about how the class is going may shape the written feedback that I solicit later in the survey.

Though I used the same syllabus for these two semesters, the two groups of students differed considerably in how difficult they perceived the course to be. Fall 2022 students overwhelmingly found the course “manageable” or “not a problem,” as shown in the graph below. From this trend, I concluded that the format of class instruction I had been using was effective for this group of students.

Comparatively, Spring 2023 students fell across a wider range from “it’s a bit rough” to “not a problem.” From this, I concluded that I needed to spend more time on the subjects that the students later identified as ones they struggled with. Further, I concluded that the class would likely benefit from a change in course content delivery, whether through different types of activities or different readings.

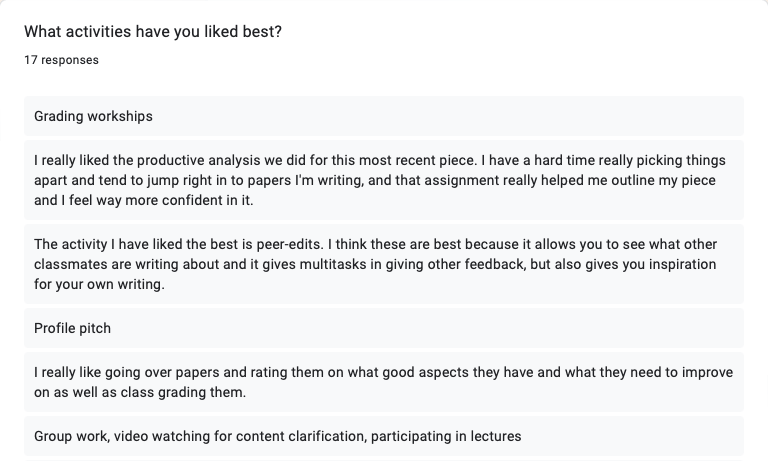

In order to identify what forms of course content delivery are working for the class, I include free response questions about what activities the class liked best. Positive open-ended questions such as “What activities have you liked best?” and “What activities have been the most helpful in developing your writing skills?” allow an instructor to identify specific activities or projects that students prefer compared to other activities or projects. Thus far, the responses to these questions have been by far the most helpful part of these feedback surveys. If students respond that they are open to group work, classroom activities can easily be made collaborative and more reflexive by means of discussion. If students respond that they prefer multimodal instruction to simple lecture, that can indicate that resources like videos and mini games can be emphasized to diversify course content delivery. Further, in these two semesters, these positive open-ended questions have affirmed that many students recognize the value of metacognitive composition tasks like peer review and revision. Altogether, these positive open-ended questions are the primary source of these surveys’ usefulness.

Fall 2022 students identified a strong preference for whole class activities, process work, and in-class writing workshop time. Because of this feedback, as the semester progressed, I tried to spend as much time as possible doing these activities to keep my students feeling that the course was of manageable difficulty.

For Spring 2023, I based my course content delivery on the preferences of the Fall 2022 class. However, as evidenced by the discrepancies in perceived difficulty level between the two semesters, I should not have done that. Instead, I should have given the students of Spring 2023 a variety of types of course delivery and surveyed them on their preferences earlier than Unit 3. Had I done so, I would have identified this group of students’ preference for review practice and gamification of course content earlier, as exemplified by the screenshot below, and I likely would have been able to make the course more manageable for that semester’s students.

From the comparison of these two semesters’ students’ responses, I quickly learned the necessity of soliciting this sort of feedback early to identify class preferences. This can impact students’ comfort within the classroom, and their comfort levels can then impact their understanding of course content.

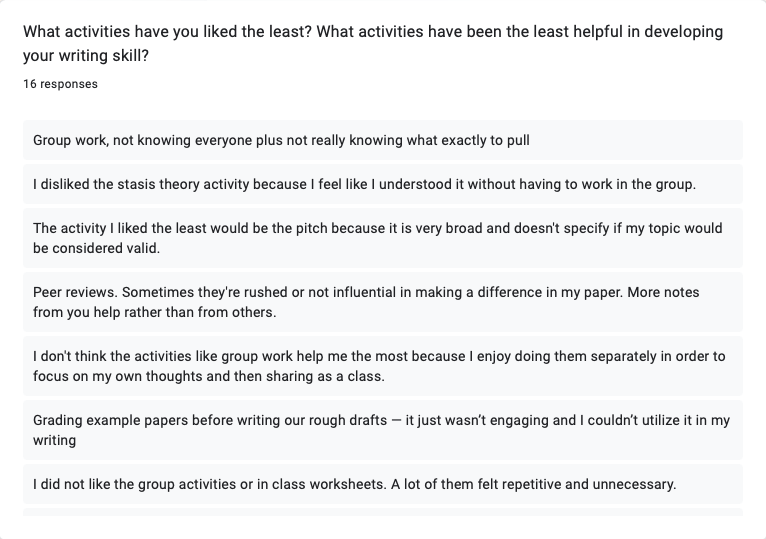

Comparatively, negative open-ended questions such as “What activities have you liked the least? What activities have been the least helpful in developing your writing skills?” may not elicit completely helpful feedback because students often chafe against institutional standards that are difficult to adjust at an individual instructor or class level. I did not include this question in the Fall 2022 feedback survey, but I tested it out in the Spring 2023 feedback survey in order to see if students were more resistant to my activities or to the institutional structure of the course. In their feedback, five responses pointed to institutional requirements for the course like peer review, eight responses pointed to activities that I had designed to address learning objectives, and the other three responses said that the student did not find any activities unhelpful. Five of the responses about my activities were about this class’s distaste for group work. If I had given this survey earlier in the semester, I could have easily adjusted my in-class activities to avoid group work, and this ability to adjust in response to feedback is where this question is most useful for pedagogy.

If distributed more regularly, and perhaps made a requirement rather than an optional activity, these surveys could serve as a way to collect information on how students perceive their own participation and progress in the class. For instance, I have included a question that asks “How well do you think you are doing in this course?” The past couple of iterations of this survey have not garnered responses that I find informative or that offer clear direction. The question was intended to solicit responses about student participation, but the responses have not reflected that intention. However, the question could be improved with some scaffolding. This scaffolding could include prompting questions such as “What does A-level participation look like, in your own words? Do you feel like your participation has met that, and if so, how?” In the pursuit of allowing students to participate in ways that they find most comfortable or productive, this sort of feedback can perhaps gather ideas for new sorts of participation as well as indicate how comfortable students are in participating in any way.

The feedback is of course situational, and sometimes students suggest adjustments that are outside the institutional parameters set upon widely implemented courses like introductory composition. However, student feedback can be widely informative for learning about the personality of the particular class and thus tailoring the delivery of materials. Just as importantly, students can offer inspiration for new activities around the material and tips for making the course as engaging and manageable as possible.

Perhaps one of the most important benefits of these surveys is the role they can play in establishing a classroom environment in which students feel like they have a critical role in how a classroom runs. Instead of treating students as simply receivers of knowledge, these surveys treat students as authorities on how well the class is going and how it could be even better, and thus as creators of knowledge as well.

Participation Student Evaluation

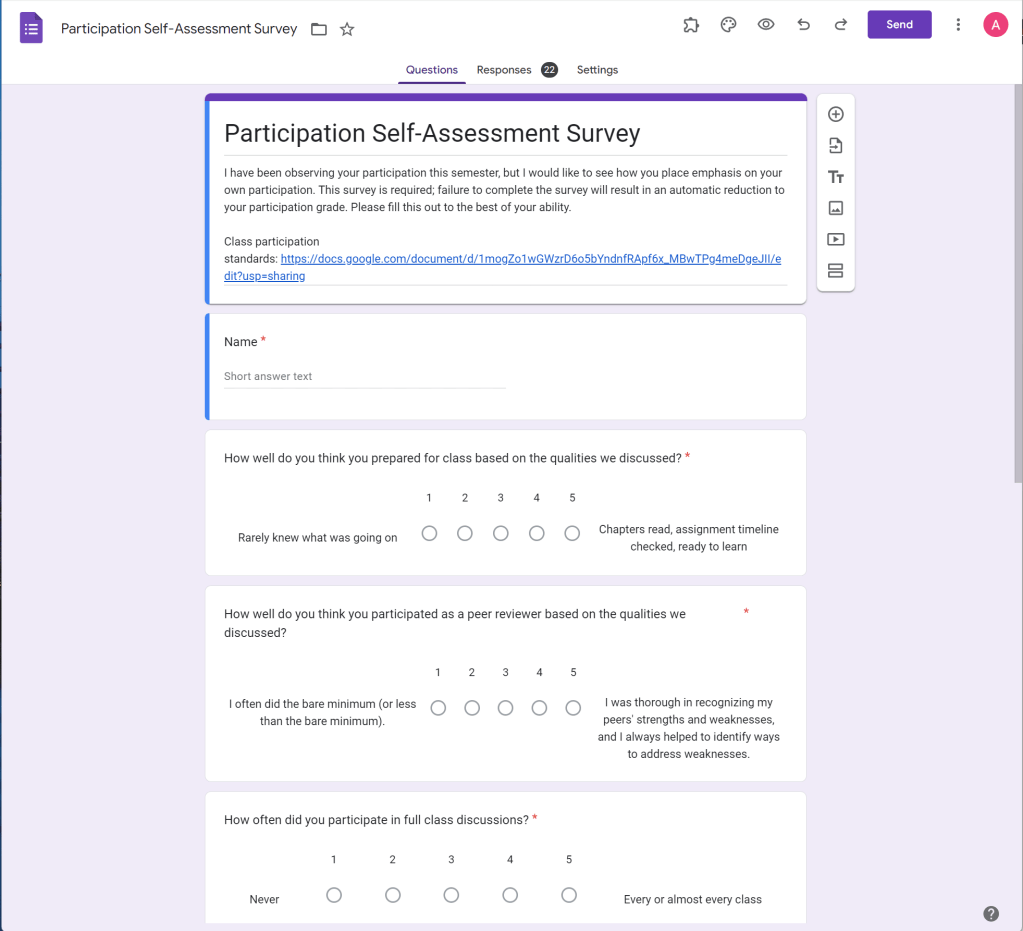

When evaluating the nebulous category of “Active Participation” that features on many syllabi, it is easy to overlook some forms of valuable participation if they are not as evident as asking questions in class or coming to office hours. Because of this, I prefer to build grading standards alongside my students, and then I ask them to evaluate themselves.

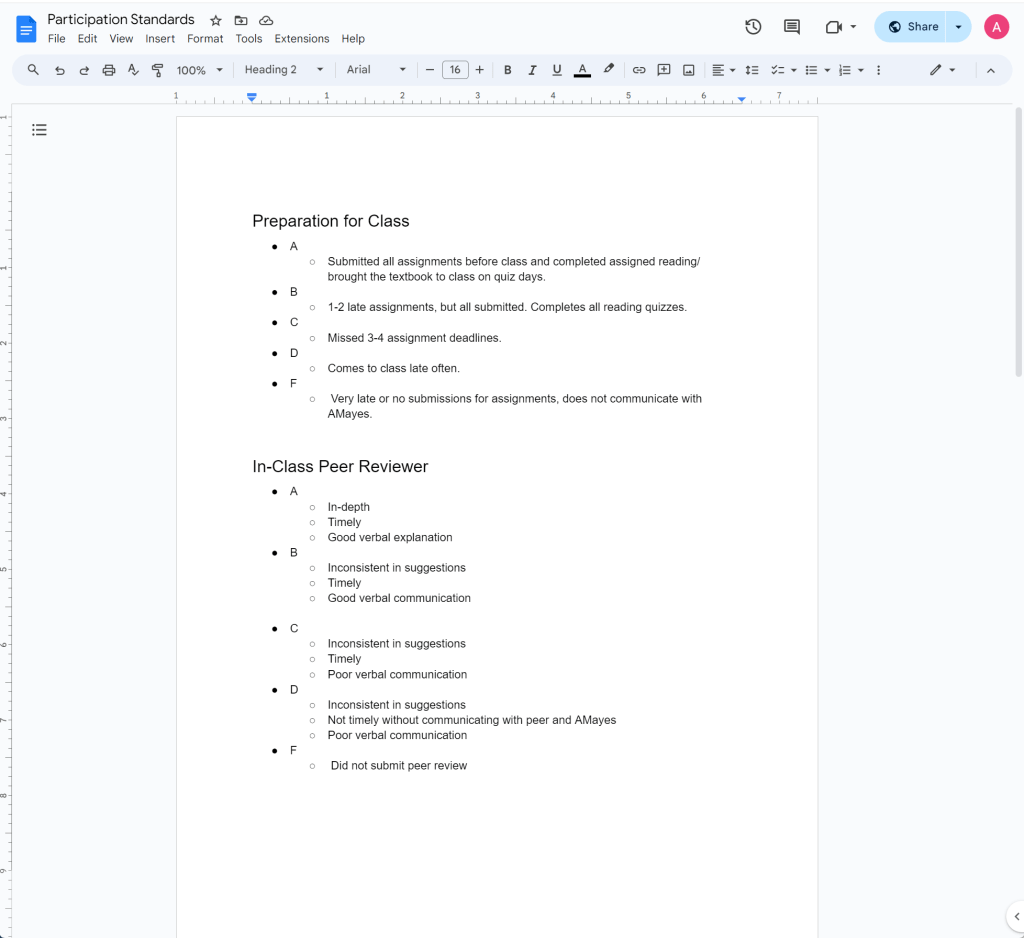

In my Spring 2023 section of Technical Communication, I facilitated a class collaboration to build the course participation standards. The Google Doc was created with six sections to match the six sections of the self-assessment survey. Each of the six sections was given a grading scale, and I asked the students to fill in qualities that they felt were appropriate for the assignment of that grade for that section.

After a period of in-class time during which students filled out the document, we reorganized the categories as a class into a clear descending scale to better reflect the grading scale. Sometimes my intervention produced larger consequences for steps down the grading scale, but mostly it made the intervals down the grading scale less significant.

After we collaboratively created the grading standards, I asked my students to self-assess how their participation stacked up against the class standards. The survey was on a 1-5 scale to reflect the 5-step grading scale. In the future, I would revise the survey to more directly match the A-F grading scale, as some students seemed confused about transferring their assessment from A-F to the flipped and numeric 1-5.

Notably, not every student will accurately represent their participation. Some will fudge their responses to make the negatives of their participation weigh less, and some will undersell their less visible forms of participation. These class-generated standards and self-evaluations still require instructor intervention when it comes to submitting grades in order to mediate this over- and underselling, but they do give students an idea of how to better participate in clearly outlined ways.

Canvas Modules

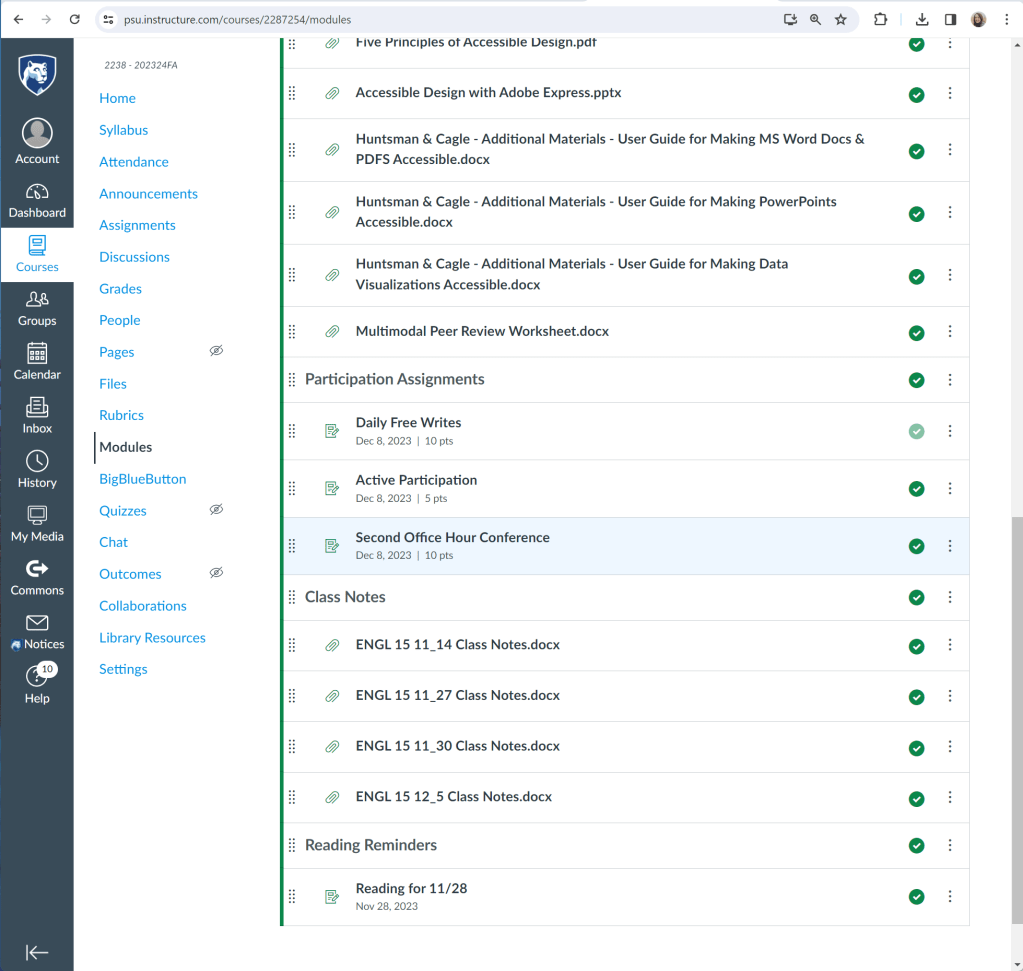

Course content management can be an extremely helpful tool for both students and instructors if done well. In Fall 2023, I was allowed to build an introduction to rhetoric and composition syllabus within programmatic constraints but otherwise as I pleased. In addition to this, I got to design my course Canvas page from scratch. One of my priorities for designing the page was to make deadlines clear and manageable for students. Another priority was to keep all course content organized by project on the front page of the site. I found that the best way to do both was through Canvas’s Module function. My modules include five sections: Progress Work, Files, Participation Assignments, Class Notes, and Reading Reminders.

In the first screenshot of the course Canvas page, the Progress Work and Files sections are visible. The Progress Work section collects the drop boxes for all major cumulative assignments for that particular unit’s project. Due dates and point values are visible here, and the section is organized in order of due date. Then the Files section collects all major assignment files, reference materials, and class PowerPoints. They are added by date and organized loosely, as these files are applicable across particular assignments.

The second screenshot shows the Participation Assignments, Class Notes, and Reading Reminders sections. The Participation Assignments section includes the drop boxes for any work completed in class (and the Active Participation semester assignment). For the Class Notes section, I post the notes that I use as lesson plans for student use before every class period. It is important that these files are Word documents so that students can edit them for their own purposes. I usually use these notes over PowerPoints, as I find that students prefer them and they make class more accessible for absent students. Finally, I conclude the module with the Reading Reminders section. These notifications help keep students oriented to the readings they will need to do before class because they show up on the Canvas calendar just as assignments do.

Altogether, I find that creating modules like this one is productive for course design, especially for first-year students who may struggle with time management or being proactive in finding necessary course content.